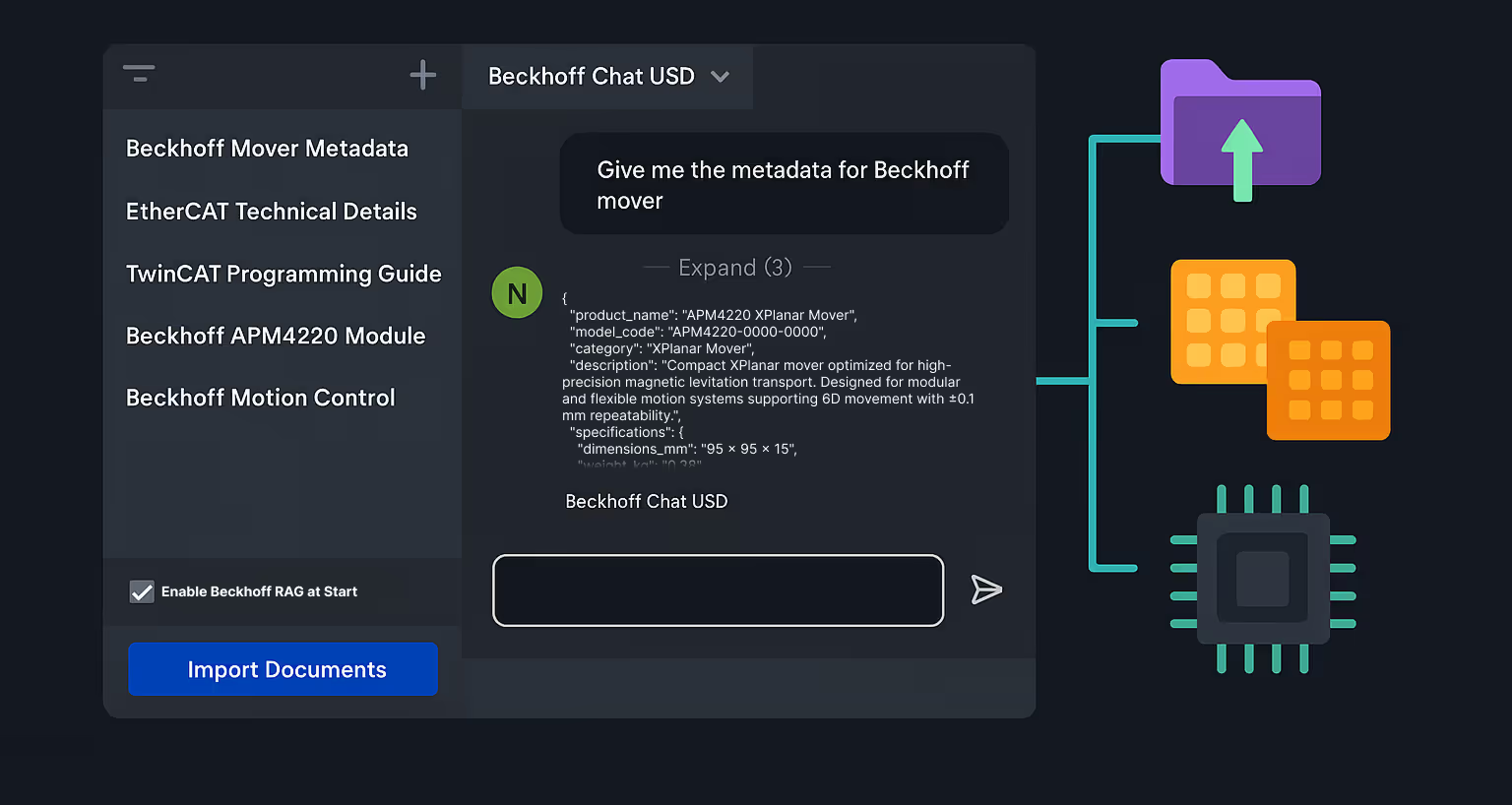

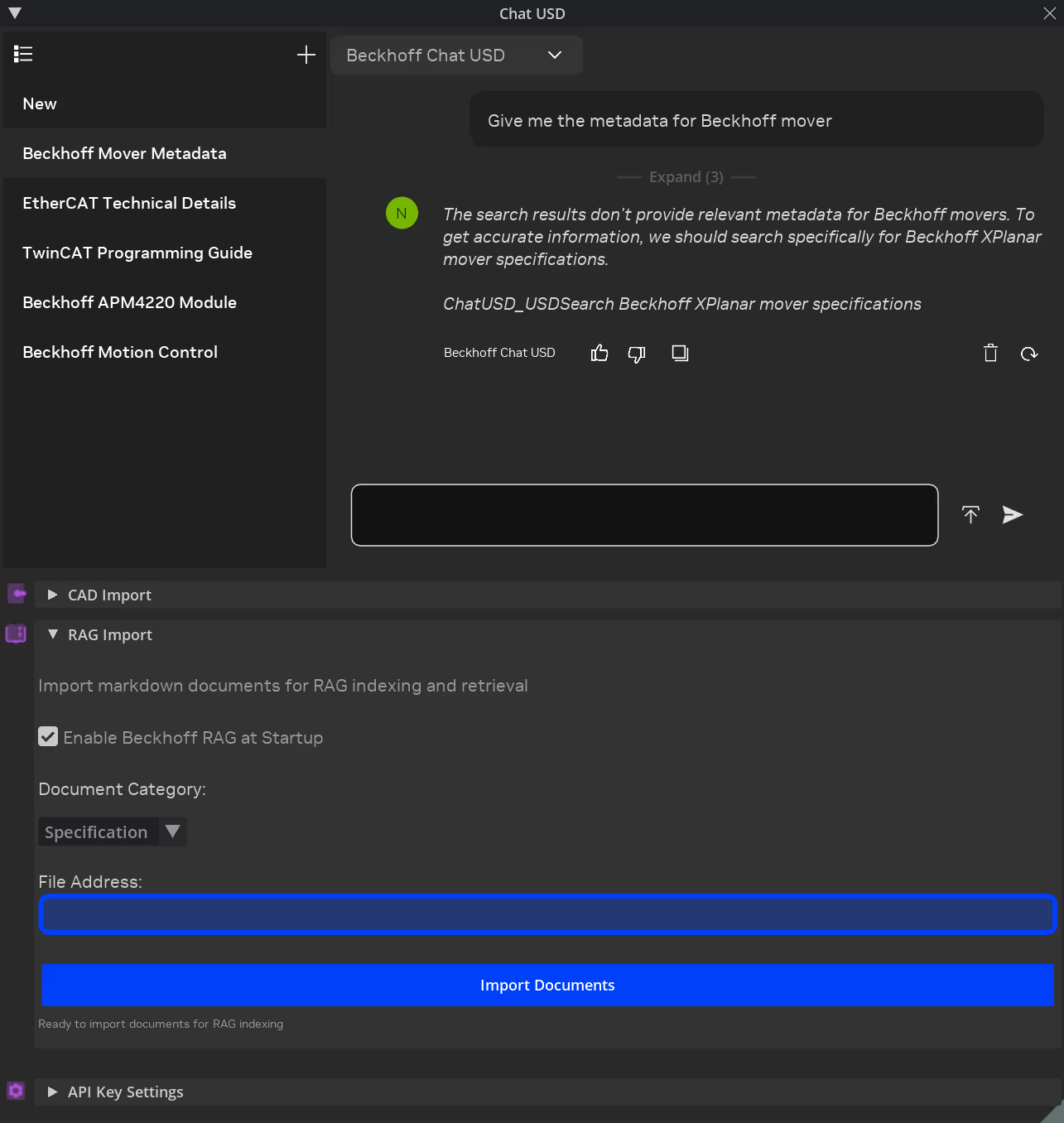

Before diving into the technical implementation, we solved a key usability challenge — enabling non-technical users to expand the AI’s knowledge base without code.

The solution was the Drag-and-Drop RAG Import Widget, built directly into the Chat USD interface. With it, engineers can add or update Beckhoff documentation in the assistant’s knowledge base from inside Omniverse in under a minute.

How it works:

This workflow simplifies data management and keeps the assistant aligned with the latest Beckhoff product information.
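The widget’s internals are not described here, so the following is only a rough illustration of how a drop could refresh the index. It is a minimal sketch that assumes two things. First, dropped Markdown files are re-ingested and replace every chunk previously indexed from a file with the same name. Second, an unchanged file (same SHA-256 digest) is skipped. `DocIndex`, `handle_drop`, and the naive fixed-size chunking are hypothetical placeholders, not the Chat USD or USD Agent API.

```python
import hashlib
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class DocIndex:
    """Hypothetical in-memory index: chunks grouped by source file name."""
    chunks_by_source: dict[str, list[str]] = field(default_factory=dict)
    digests: dict[str, str] = field(default_factory=dict)

    def upsert(self, source: str, text: str, chunk_size: int = 1000) -> bool:
        """Replace all chunks from `source`; return False if the content is unchanged."""
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if self.digests.get(source) == digest:
            return False  # identical re-drop: nothing to re-embed
        # Naive fixed-size chunking stands in for the real splitter and embedding call.
        self.chunks_by_source[source] = [
            text[i:i + chunk_size] for i in range(0, len(text), chunk_size)
        ]
        self.digests[source] = digest
        return True

    def chunk_count(self) -> int:
        return sum(len(chunks) for chunks in self.chunks_by_source.values())


def handle_drop(index: DocIndex, paths: list[str]) -> list[str]:
    """Ingest dropped Markdown files; return the names of sources that changed."""
    updated = []
    for raw in paths:
        path = Path(raw)
        if path.suffix.lower() != ".md":
            continue  # this sketch only accepts Markdown
        if index.upsert(path.name, path.read_text(encoding="utf-8")):
            updated.append(path.name)
    return updated
```

Keying by file name keeps the sketch short. A real widget would probably key by a stable document ID, so that two files with the same name in different folders do not overwrite each other.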

To make this possible, we developed a Retrieval-Augmented Generation (RAG) pipeline optimized for Beckhoff’s technical documentation. The system indexes and retrieves domain-specific data for precise, low-latency responses.

A custom ingestion script (beckhoff_markdown_ingestion.py) extracts metadata such as part numbers, model codes, and specifications. The content is segmented using a character-based splitter tuned for technical writing to preserve hierarchy and context.

Configuration highlights:

- Embeddings: `nv-embedqa-e5-v5` model via NVIDIA AI Endpoints
- Retriever: `beckhoff_docs` in the USD Agent core module

This architecture allows the system to retrieve relevant technical data in under 500 ms per query, drawing directly on Beckhoff documentation rather than the broader USD knowledge base.
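To make the retrieval step concrete, here is a minimal sketch of cosine-similarity search over precomputed chunk embeddings. It uses the top-K of 15 and the similarity threshold of 0.7 listed in the metrics table below. The sketch assumes the query and chunk vectors have already been produced by the embedding model. The `search` function, the chunk IDs, and the toy 3-dimensional vectors are illustrative only; they are not the USD Agent retriever API.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, index, top_k=15, threshold=0.7):
    """Return up to `top_k` (score, chunk_id) pairs with score >= `threshold`, best first."""
    scored = ((cosine(query_vec, vec), chunk_id) for chunk_id, vec in index.items())
    hits = [(score, chunk_id) for score, chunk_id in scored if score >= threshold]
    return heapq.nlargest(top_k, hits)


# Toy usage with hypothetical chunk IDs and 3-dimensional vectors.
index = {
    "EL1008-wiring": [1.0, 0.0, 0.0],
    "EL1008-specs": [0.9, 0.1, 0.0],
    "XPlanar-intro": [0.0, 1.0, 0.0],
}
print(search([1.0, 0.0, 0.0], index))
```

With only 178 chunks in the index, a brute-force scan like this is cheap. Larger corpora would typically use an approximate nearest-neighbor index instead. The threshold is what lets the retriever return nothing at all when no Beckhoff chunk is close enough, rather than padding the context with weak matches.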

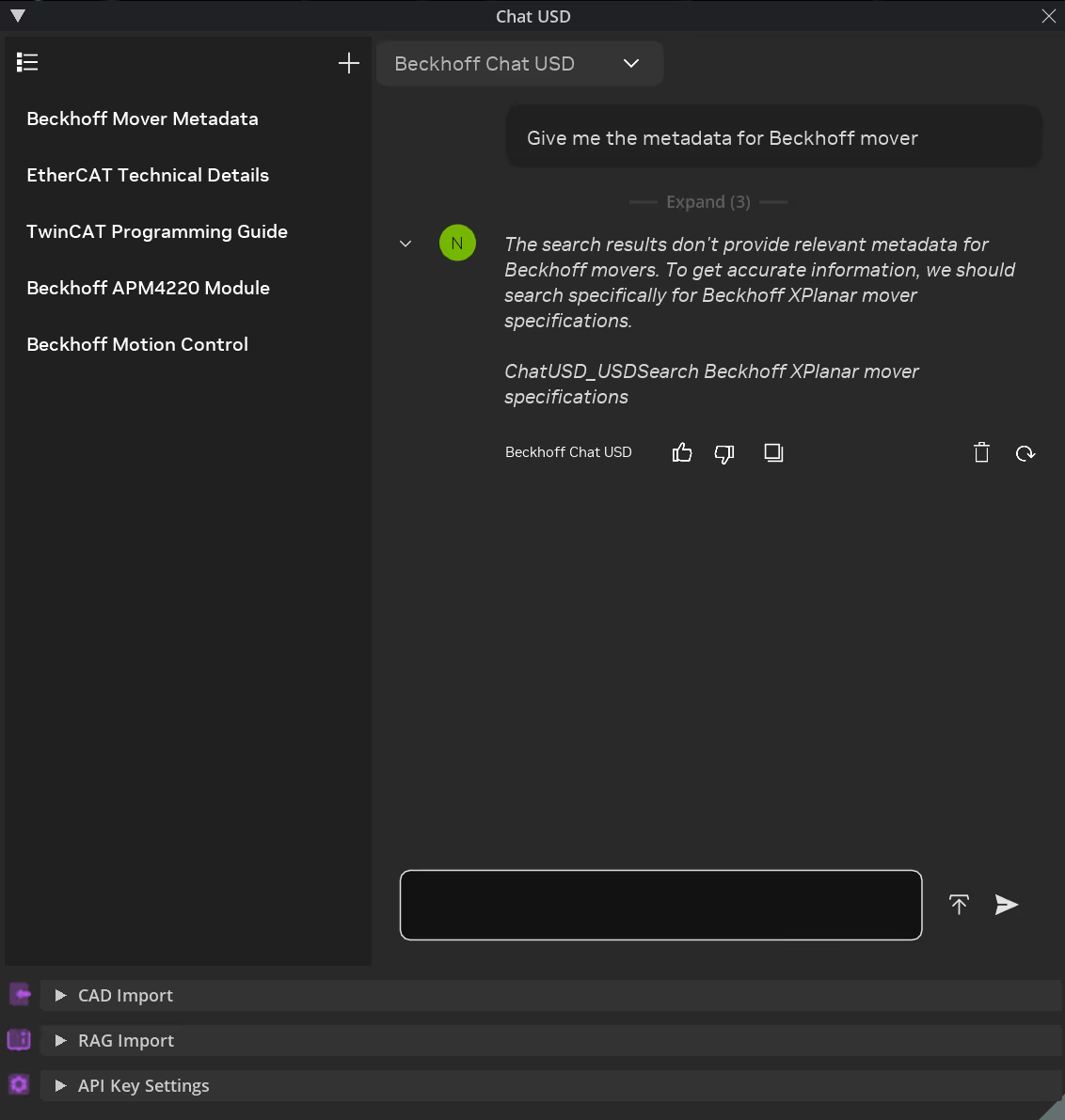

We developed a dedicated Chat USD model called “Beckhoff Chat USD”, which operates entirely on Beckhoff data within Omniverse.

Unlike the standard Chat USD agent, this version removes connections to general USD search modules and relies solely on the Beckhoff RAG retriever. This ensures every response is based exclusively on verified Beckhoff documentation — delivering brand-accurate and context-specific results for automation engineers.

Key improvements:

Why we did this:

Industrial automation data is highly specialized. By isolating the model, we ensured engineers receive responses grounded in Beckhoff’s ecosystem — accurate, reproducible, and consistent across sessions.

To reinforce model isolation, we created a Beckhoff-specific configuration in extension.toml. This ensures the system retrieves data solely from the Beckhoff retriever while disabling other knowledge sources.

Configuration highlights:

- `beckhoff_only_mode = true`
- `primary_retriever = "beckhoff_docs"`
- `beckhoff_priority = 1.0`
- `other_retrievers_priority = 0.0`

This approach improves consistency, reduces noise, and optimizes retrieval latency, ensuring every query remains focused on Beckhoff’s data structure and terminology.
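The combined effect of these four settings can be sketched in a few lines. This sketch assumes three things:

- the values have already been loaded from `extension.toml` into a dictionary;
- isolation mode consults only the primary retriever;
- otherwise, retrievers with priority 0.0 are skipped and the rest are queried in priority order.

`select_retrievers` and the `usd_docs`/`usd_code` retriever names are hypothetical. The actual USD Agent routing logic may differ.

```python
# Values mirroring the extension.toml settings above (loaded into a dict for this sketch).
CONFIG = {
    "beckhoff_only_mode": True,
    "primary_retriever": "beckhoff_docs",
    "beckhoff_priority": 1.0,
    "other_retrievers_priority": 0.0,
}


def select_retrievers(available: list[str], config: dict) -> list[str]:
    """Hypothetical router: retrievers to query, highest priority first; 0.0 means skip."""
    primary = config["primary_retriever"]
    if config.get("beckhoff_only_mode", False):
        # Isolation mode: only the Beckhoff retriever is ever consulted.
        return [primary] if primary in available else []
    priorities = {
        name: (config["beckhoff_priority"] if name == primary
               else config["other_retrievers_priority"])
        for name in available
    }
    # sorted() is stable, so equal priorities keep their original order.
    ranked = sorted(available, key=lambda name: priorities[name], reverse=True)
    return [name for name in ranked if priorities[name] > 0.0]


available = ["usd_docs", "beckhoff_docs", "usd_code"]  # hypothetical retriever names
print(select_retrievers(available, CONFIG))
```

Under this reading, `beckhoff_only_mode` acts as a hard switch. The priority values still do the same job if the switch is ever turned off, because a priority of 0.0 keeps the other retrievers out of the query path.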

To extend the system’s practical value, we added a custom metafunction that automates XPlanar tile placement using natural language input.

Example:

“Create a 10×10 grid of XPlanar tiles with 2 cm gaps.”

The function place_xplanar_tiles() supports multiple layout patterns — grid, checkerboard, U-shape, L-shape, corridor, and others — and three instancing modes (duplicate, reference, and point instancer). It automatically generates parameterized layouts with validation, optimizing scene setup for simulation and visualization.
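As an illustration of a parameterized layout generator with validation, here is a minimal sketch. It computes tile center positions for four of the patterns named above: grid, checkerboard, L-shape, and U-shape. This is not the `place_xplanar_tiles()` implementation. The function name `layout_positions`, its parameters, the pattern definitions, and the 0.24 m tile edge in the usage example are all assumptions. The sketch only computes coordinates in meters; authoring the USD prims (duplicate, reference, or point instancer) is left out.

```python
# Hypothetical pattern rules: keep the tile at (row r, column c) when the rule returns True.
PATTERNS = {
    "grid": lambda r, c, rows, cols: True,
    "checkerboard": lambda r, c, rows, cols: (r + c) % 2 == 0,
    "l_shape": lambda r, c, rows, cols: r == 0 or c == 0,
    "u_shape": lambda r, c, rows, cols: r == 0 or c == 0 or c == cols - 1,
}


def layout_positions(rows, cols, tile_size, gap, pattern="grid"):
    """Return (x, y) tile centers in meters for `pattern`, with tile (0, 0) at the origin."""
    if rows < 1 or cols < 1:
        raise ValueError("rows and cols must be at least 1")
    if tile_size <= 0 or gap < 0:
        raise ValueError("tile_size must be positive and gap non-negative")
    if pattern not in PATTERNS:
        raise ValueError(f"unknown pattern {pattern!r}; expected one of {sorted(PATTERNS)}")
    keep = PATTERNS[pattern]
    pitch = tile_size + gap  # center-to-center distance between neighboring tiles
    return [
        (round(c * pitch, 6), round(r * pitch, 6))  # rounding hides float noise
        for r in range(rows)
        for c in range(cols)
        if keep(r, c, rows, cols)
    ]


# "Create a 10×10 grid of XPlanar tiles with 2 cm gaps", assuming a 240 mm tile edge.
grid = layout_positions(10, 10, tile_size=0.24, gap=0.02)
print(len(grid), grid[1], grid[-1])
```

For the example request, this yields 100 centers spaced 0.26 m apart. Validating parameters before any geometry is created means a malformed request fails fast, instead of leaving a half-built layout in the stage. Before authoring prims, the coordinates would still need converting to the stage’s units.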

This enables rapid scene prototyping and interactive planning of XPlanar systems without manual modeling.

| ⚙️ **Metric** | 📊 **Value** |
|----------------|---------------|
| ⚡ **Retrieval Latency** | <500 ms per query |
| 💾 **Index Size** | ~2–3 MB (178 chunks) |
| 🧮 **Embedding Dimensionality** | 768 (NV-Embed-QA) |
| 🧠 **LLM Context Window** | 128K tokens (Llama 4 Maverick) |
| 🔍 **Top-K Retrieval** | 15 chunks (≈7,500 characters avg.) |
| 📈 **Similarity Threshold** | 0.7 (cosine similarity) |
| 🧩 **Metafunction Retrieval** | 3,114 indexed functions |
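As a quick sanity check on the index figures, the raw embedding vectors alone are small. This assumes each of the 178 vectors is stored as 768 float32 values (4 bytes each), which is an assumption about the storage format. Under that assumption, the vectors occupy roughly half a megabyte. The rest of the reported 2–3 MB presumably goes to chunk text, metadata, and storage-format overhead.

```python
# Back-of-envelope size of the raw embedding matrix.
CHUNKS = 178            # chunk count from the table
DIMENSIONS = 768        # embedding dimensionality from the table
BYTES_PER_FLOAT32 = 4   # assumption: vectors stored as 32-bit floats

raw_bytes = CHUNKS * DIMENSIONS * BYTES_PER_FLOAT32
print(f"{raw_bytes:,} bytes ≈ {raw_bytes / 1e6:.2f} MB")  # 546,816 bytes ≈ 0.55 MB
```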

The integration delivers:

If you’re exploring ways to make your simulation or automation workflows more intelligent, Champion IO’s Beckhoff integration demonstrates what’s possible when domain-specific knowledge meets GPU-native AI. Our work with NVIDIA’s USD Agent SDK is just the beginning — bringing real-time, context-aware intelligence directly into Omniverse.

👉 Get in touch to see how we can adapt this architecture to your automation or simulation environment.

Author:

Amir Tamadon

Co-founder & CTO, Champion IO

Engineering better ways to think in 3D.