Learn how to use ROS 2 bags to record and replay topic data. Perfect for debugging, simulation, and generating repeatable training datasets.

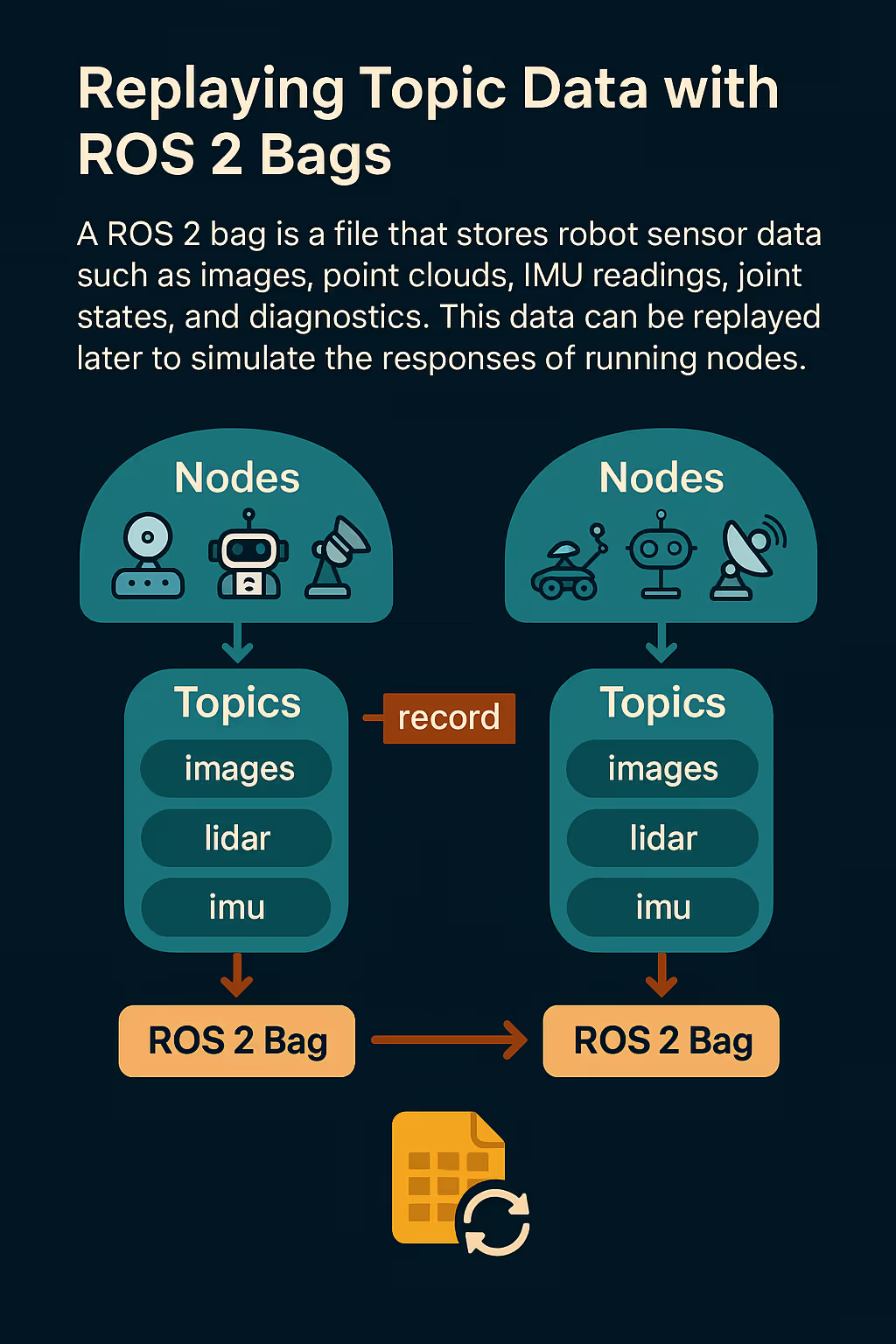

When building robots, things rarely go perfectly on the first try. You might see unexpected behavior, missed sensor data, or strange control outputs, and without a way to capture what happened, it’s hard to debug. That’s where ROS 2 bags come in. A bag file is like a time machine for your robot: it records the messages published on the ROS 2 topics you select (or on all topics), so you can pause, rewind, and replay your robot’s data as if it were happening live.
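To make the "time machine" idea concrete, here is a minimal, pure-Python toy model of what a bag stores and how playback uses it. It is not the rosbag2 API: `ToyBag`, `BagRecord`, `record`, and `replay` are hypothetical names. The sketch assumes each message is stored with its topic name and a timestamp in nanoseconds. Playback sorts messages by timestamp and computes how long to wait before publishing each one, scaled by a playback rate.

```python
from dataclasses import dataclass, field
from typing import Any, Iterator, List, Tuple


@dataclass(order=True)
class BagRecord:
    # Only stamp_ns takes part in comparisons, so sorting follows recording time.
    stamp_ns: int
    topic: str = field(compare=False)
    data: Any = field(compare=False)


class ToyBag:
    """Hypothetical in-memory stand-in for a bag file (not rosbag2)."""

    def __init__(self) -> None:
        self._records: List[BagRecord] = []

    def record(self, topic: str, stamp_ns: int, data: Any) -> None:
        # A real recorder serializes each message; this toy keeps it as-is.
        self._records.append(BagRecord(stamp_ns, topic, data))

    def replay(self, rate: float = 1.0) -> Iterator[Tuple[float, str, Any]]:
        """Yield (delay_s, topic, data); delay_s is the wait since the previous message."""
        if rate <= 0:
            raise ValueError("rate must be positive")
        ordered = sorted(self._records)
        prev_ns = ordered[0].stamp_ns if ordered else 0
        for rec in ordered:
            # Preserve the original spacing between messages, scaled by the rate.
            delay_s = (rec.stamp_ns - prev_ns) / 1e9 / rate
            prev_ns = rec.stamp_ns
            yield delay_s, rec.topic, rec.data
```

A real player would sleep for `delay_s` and then publish each message on its topic. In practice you do not write this yourself: `ros2 bag record` captures topics and `ros2 bag play` republishes them, and its `--rate` option scales playback speed much like the `rate` argument here.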

In simulation environments like NVIDIA Isaac Sim, bag files are especially powerful. They allow you to record simulated runs—LiDAR scans, camera images, robot states—and then replay those exact conditions to test new algorithms or train AI models without re-running the full physics simulation. Whether you're debugging motion planners or benchmarking perception models, ROS 2 bags help you make the most of every simulation pass.
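Replaying identical input is what makes regression testing possible: feed the exact same recorded frames to an old and a new version of an algorithm and list where they disagree. The sketch below assumes the LiDAR scans have already been extracted from a bag as lists of ranges in meters. `nearest_obstacle_v1`, `nearest_obstacle_v2`, `compare_on_replay`, and the 0.05 m minimum range are hypothetical, chosen only for illustration.

```python
import math
from typing import Callable, List, Sequence

Scan = Sequence[float]  # ranges in meters, one entry per beam


def nearest_obstacle_v1(scan: Scan) -> float:
    # Baseline version: plain minimum over all readings, including NaN and too-close noise.
    return min(scan)


def nearest_obstacle_v2(scan: Scan, range_min: float = 0.05) -> float:
    # Candidate version: skip NaN and readings below an assumed minimum valid range.
    valid = [r for r in scan if not math.isnan(r) and r >= range_min]
    return min(valid, default=math.inf)


def compare_on_replay(
    frames: List[Scan],
    old: Callable[[Scan], float],
    new: Callable[[Scan], float],
    tol: float = 1e-6,
) -> List[int]:
    """Return indices of replayed frames where the two versions disagree."""
    diffs = []
    for i, scan in enumerate(frames):
        a, b = old(scan), new(scan)
        # Equal values (including inf == inf) match; finite values may differ by tol.
        same = a == b or (math.isfinite(a) and math.isfinite(b) and abs(a - b) <= tol)
        if not same:
            diffs.append(i)
    return diffs
```

Because the frames come from a recording rather than a live simulation, rerunning the comparison always produces the same list of differing frames, which makes it suitable for automated checks.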

🧱 Why USD Matters When Using ROS 2 Bags in Isaac Sim

- Scene Reproducibility

ROS 2 bags record the data flow, but not the visual or physical scene. USD (Universal Scene Description) captures the full 3D scene graph: every object and its position, physics properties, sensors, and lighting setups. By saving both the USD stage and the bag file, you get a fully reproducible test environment: what the robot saw, what it did, and what the world looked like at that time.

- Versioned Simulation Contexts

If you’re iterating on simulation logic, robot geometry, or sensor layouts, USD lets you version and compare scenes. Combine this with bags to replay the same motion or perception data across multiple USD variants for testing or benchmarking.

- Multi-pass AI Training

For perception tasks, you can use USD to dynamically modify the environment (lighting, object placement, materials), then replay the same ROS 2 bag to generate diverse datasets from the same motion path. This is ideal for synthetic data generation pipelines.

- Sim-to-Real Bridging

Together, USD and bags let you record a real robot's behavior in a bag, then recreate the same scenario in Isaac Sim by placing USD props at matching positions and playing the bag back. This is a foundational technique for sim-to-real validation.

- Metadata Anchoring

USD supports custom metadata and annotations, which can be linked to events or sensor outputs stored in the ROS 2 bag (e.g., tagging when a collision occurred or when a specific object entered the field of view).
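For the Scene Reproducibility point, one lightweight way to keep a USD stage and its bag together is a run manifest that stores a content hash of each, so you can later confirm that neither has changed. This is an assumption, not an Isaac Sim or rosbag2 feature: `build_manifest` and `verify_manifest` are hypothetical helpers. The sketch treats the bag as a directory of files (rosbag2 writes each recording as a directory) and the stage as a single USD file.

```python
import hashlib
from pathlib import Path
from typing import Dict


def _sha256_file(path: Path) -> str:
    # Hash the file in 1 MiB chunks so large bags do not need to fit in memory.
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def _sha256_dir(directory: Path) -> str:
    # Hash relative paths and file hashes in sorted order so the digest is stable.
    h = hashlib.sha256()
    for p in sorted(directory.rglob("*")):
        if p.is_file():
            h.update(p.relative_to(directory).as_posix().encode())
            h.update(_sha256_file(p).encode())
    return h.hexdigest()


def build_manifest(stage: Path, bag_dir: Path) -> Dict[str, str]:
    """Pair a USD stage with a bag directory by content hash (hypothetical format)."""
    return {
        "stage": str(stage),
        "stage_sha256": _sha256_file(stage),
        "bag": str(bag_dir),
        "bag_sha256": _sha256_dir(bag_dir),
    }


def verify_manifest(manifest: Dict[str, str]) -> bool:
    """True if both the stage and the bag still match the recorded hashes."""
    return (
        _sha256_file(Path(manifest["stage"])) == manifest["stage_sha256"]
        and _sha256_dir(Path(manifest["bag"])) == manifest["bag_sha256"]
    )
```

The manifest is a plain dictionary, so it can be written next to the recording with `json.dumps` and checked before every replay.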
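The Versioned Simulation Contexts and Multi-pass AI Training points both come down to replaying one bag against many scene variants. Below is a minimal planning sketch under stated assumptions: the variant file names, the randomized parameters (`light_intensity`, `material`), the `MATERIALS` list, and `plan_passes` are all hypothetical. Applying each configuration to a stage inside Isaac Sim is a separate step not shown here.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence

# Hypothetical material names, used only for illustration.
MATERIALS = ("concrete", "polished_floor", "painted_wood")


@dataclass(frozen=True)
class Pass:
    bag: str
    stage_variant: str
    seed: int
    light_intensity: float
    material: str


def plan_passes(bag: str, variants: Sequence[str], seeds: Sequence[int]) -> List[Pass]:
    """One pass per (variant, seed); every pass replays the same bag."""
    passes = []
    for variant in variants:
        for seed in seeds:
            # A fresh RNG per pass: the same seed yields the same randomization on every
            # variant, so differences between variants come only from the USD scene.
            rng = random.Random(seed)
            passes.append(Pass(
                bag=bag,
                stage_variant=variant,
                seed=seed,
                light_intensity=round(rng.uniform(500.0, 3000.0), 1),
                material=rng.choice(MATERIALS),
            ))
    return passes
```

Seeding each pass explicitly means the whole synthetic dataset can be regenerated exactly from the bag, the list of variants, and the list of seeds.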
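For the Metadata Anchoring point, annotations and bag messages need a shared time key. The sketch below assumes annotations are keyed by bag timestamp in nanoseconds (how they are persisted in USD metadata is left open here) and shows how to find the annotations near a given message. `EventIndex` and its methods are hypothetical; the lookup uses binary search from Python's standard `bisect` module.

```python
import bisect
from typing import List, Tuple


class EventIndex:
    """Hypothetical index of annotations keyed by bag timestamp (ns)."""

    def __init__(self) -> None:
        self._stamps: List[int] = []
        self._labels: List[str] = []

    def add(self, stamp_ns: int, label: str) -> None:
        # Keep both lists sorted by timestamp so lookups can use binary search.
        i = bisect.bisect_right(self._stamps, stamp_ns)
        self._stamps.insert(i, stamp_ns)
        self._labels.insert(i, label)

    def near(self, stamp_ns: int, window_ns: int) -> List[Tuple[int, str]]:
        """Return annotations within +/- window_ns of a message timestamp (inclusive)."""
        lo = bisect.bisect_left(self._stamps, stamp_ns - window_ns)
        hi = bisect.bisect_right(self._stamps, stamp_ns + window_ns)
        return list(zip(self._stamps[lo:hi], self._labels[lo:hi]))
```

During replay, calling `near()` with each message's timestamp surfaces events such as a collision or an object entering the camera's view right alongside the sensor data that captured them.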